Exploring Amazon EKS: Workload Scheduling, Deploying Sample Applications, and Scaling

Introduction

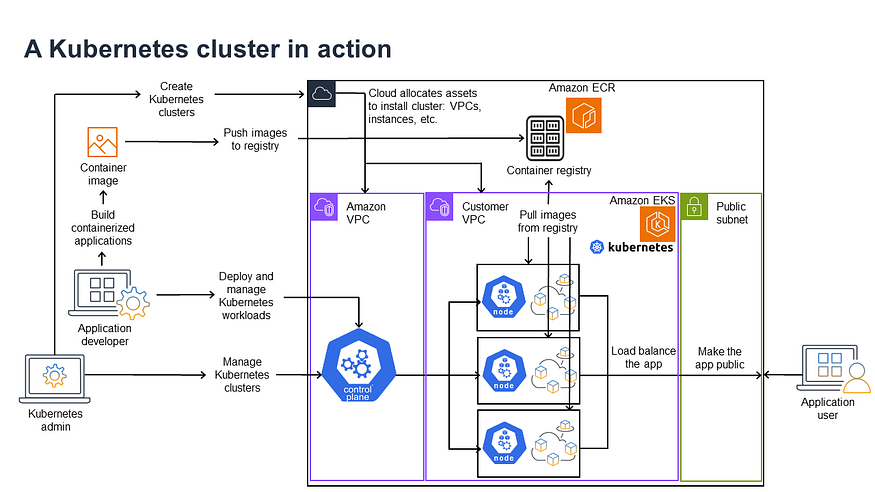

Amazon Elastic Kubernetes Service (EKS) is a managed Kubernetes service that runs and maintains the Kubernetes control plane for you on AWS, so you do not have to install and operate it yourself; worker nodes can be provisioned through node groups. This post covers workload scheduling, deploying a sample application, and scaling applications on Amazon EKS.

Prerequisites for Amazon EKS Setup

Before initiating the setup of your EKS cluster, please ensure the following prerequisites are met:

AWS Account: You must possess an active AWS account capable of creating and overseeing EKS clusters.

AWS CLI: Install and configure the AWS Command Line Interface (CLI). Detailed instructions can be found in the official AWS CLI installation guide.

kubectl: Install kubectl, the command-line tool essential for interacting with Kubernetes clusters. Refer to the official kubectl installation guide for guidance.

eksctl: Install eksctl, a straightforward command-line utility used for creating and managing EKS clusters. Refer to the eksctl installation guide for detailed instructions.

IAM Permissions: Ensure that your AWS Identity and Access Management (IAM) user or role has the permissions required to create EKS clusters, manage nodes, and configure networking. Typically, this requires permissions equivalent to AdministratorAccess.

By ensuring these prerequisites are met beforehand, you will be well-prepared to successfully set up and manage your Amazon EKS cluster.

Step 1: Setting Up EKS Cluster

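Before we delve into scheduling and deploying applications, let’s quickly set up an EKS cluster via eksctl. Note that t2.micro instances can host only a few Pods each, so you may need a larger node type for the scaling steps later in this post.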

- Create EKS Cluster:

eksctl create cluster --name my-eks-cluster --region ap-northeast-1 --nodegroup-name standard-workers --node-type t2.micro --nodes 2

- Update kubeconfig:

aws eks --region ap-northeast-1 update-kubeconfig --name my-eks-cluster
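The same cluster can also be described declaratively. The following is a minimal sketch of an eksctl config file that mirrors the flags used in the create command above. The file name cluster.yaml and the choice of a managed node group are assumptions, not something the steps above prescribe. It would be applied with eksctl create cluster -f cluster.yaml.

```yaml
# cluster.yaml (hypothetical file name)
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: my-eks-cluster        # same name as in the command above
  region: ap-northeast-1

managedNodeGroups:            # assumption: a managed node group is acceptable
  - name: standard-workers
    instanceType: t2.micro
    desiredCapacity: 2        # corresponds to --nodes 2
```

Keeping the cluster definition in a file makes it easier to review and version alongside the application manifests.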

Step 2: Scheduling Workloads

In Kubernetes, workloads are encapsulated within Pods, the fundamental deployable units. Kubernetes employs a scheduler to allocate Pods to nodes according to specified criteria and resource demands.
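To make "specified criteria and resource demands" concrete, here is a minimal sketch of a Pod that gives the scheduler both kinds of input. The Pod name and the request values are illustrative assumptions; kubernetes.io/os is a standard node label.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-constrained     # illustrative name, not used elsewhere in this post
spec:
  nodeSelector:
    kubernetes.io/os: linux   # criterion: only nodes carrying this label qualify
  containers:
    - name: nginx
      image: nginx:latest
      resources:
        requests:
          cpu: 100m           # resource demand: the node must have this much unreserved CPU
          memory: 64Mi        # and this much unreserved memory
```

If no node satisfies both the selector and the requests, the Pod stays in the Pending state until one does.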

Pod Scheduling Example

Let’s create a basic Pod definition and explore scheduling:

- Create a Pod Manifest (nginx-pod.yaml):

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
spec:
  containers:
    - name: nginx
      image: nginx:latest
      ports:
        - containerPort: 80

- Apply the Manifest:

kubectl apply -f nginx-pod.yaml

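- View the Pod:

kubectl get pods -o wide

The NODE column shows which node the scheduler assigned the Pod to.

To reach the application from outside the cluster, expose it through a Service of type LoadBalancer and list it with:

kubectl get services

The Service of type LoadBalancer will create an AWS Elastic Load Balancer and expose your application to the internet.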
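The scaling steps that follow operate on a Deployment named nginx-deployment. As a minimal sketch, a Deployment and LoadBalancer Service matching that name could look like the manifest below. The Service name nginx-service, the app: nginx label, the initial replica count, and the CPU request are assumptions made for illustration.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 2                 # assumed starting point
  selector:
    matchLabels:
      app: nginx              # assumed label; must match the Pod template below
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80
          resources:
            requests:
              cpu: 100m       # assumed value; CPU-based autoscaling needs a CPU request
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service         # hypothetical name
spec:
  type: LoadBalancer          # asks AWS to provision a load balancer
  selector:
    app: nginx                # routes traffic to the Deployment's Pods
  ports:
    - port: 80
      targetPort: 80
```

After saving this to a file and running kubectl apply -f on it, the EXTERNAL-IP column of kubectl get services should eventually show the load balancer's DNS name.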

Step 3: Scaling Application

Scaling in Kubernetes can be horizontal (adding more Pods) or vertical (adding more resources to existing Pods). Here, we’ll focus on horizontal scaling.

Manual Scaling

1. Scale Deployment Manually:

kubectl scale deployment/nginx-deployment --replicas=5

2. Verify Scaling:

kubectl get deployments

kubectl get pods
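Manual scaling can also be done declaratively by changing the replica count in the Deployment manifest and re-applying it with kubectl apply -f. The excerpt below is a sketch that shows only the relevant field; it is not a complete manifest, and the rest of the Deployment spec is assumed to stay unchanged.

```yaml
# Excerpt of the Deployment manifest; selector and template are omitted here.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 5                 # desired number of Pods, equivalent to --replicas=5
```

Keeping the replica count in the manifest means the desired state lives in one place rather than in an ad hoc command.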

Autoscaling

Kubernetes also provides the Horizontal Pod Autoscaler (HPA), which scales your applications automatically based on CPU utilization or other metrics.

1. Enable Metrics Server:

kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

2. Create HPA Manifest (nginx-hpa.yaml):

apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: nginx-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: nginx-deployment
  minReplicas: 2
  maxReplicas: 10
  targetCPUUtilizationPercentage: 70

3. Apply the HPA:

kubectl apply -f nginx-hpa.yaml

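The HPA will now monitor the CPU utilization of your Pods and scale the number of replicas between the specified minimum and maximum limits. Utilization is calculated relative to the CPU requests of the Pods' containers, so the Deployment must set resources.requests.cpu for the target percentage to take effect.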
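For scaling on "other metrics", the autoscaling/v2 API expresses targets as a list of metrics instead of a single CPU field. The following is a sketch of the same autoscaler in that form, assuming the cluster serves autoscaling/v2; the CPU target matches the 70% used above.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: nginx-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: nginx-deployment
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # same threshold as targetCPUUtilizationPercentage
```

Further entries, such as a memory target, could be appended to the metrics list. The current state of the autoscaler can be checked with kubectl get hpa.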

Step 4: Deleting the EKS Cluster

To delete the Amazon EKS cluster and its associated resources that you created using eksctl, you can use the following command:

eksctl delete cluster --name my-eks-cluster --region ap-northeast-1

Conclusion

Amazon EKS streamlines the deployment of Kubernetes on AWS, offering a robust and scalable platform for containerized applications. In this blog post, we delved into workload scheduling, deployed a sample NGINX application, and demonstrated scaling capabilities through manual and automated Horizontal Pod Autoscaling (HPA). Armed with these foundational skills, you are equipped to effectively oversee your workloads on Amazon EKS.