Volumes

By default, a container stores its data inside its own file system.

Containers are ephemeral in nature.

When they are destroyed, the data inside them gets deleted.

Also, when running multiple containers in a pod, it is often necessary to share files between those containers.

To persist data beyond the lifecycle of a container, and with some volume types beyond the lifecycle of the pod, Kubernetes provides volumes.

A volume can be thought of as a directory that is accessible to the containers in a pod.

The medium backing a volume and its contents are determined by the volume type.

Types of Kubernetes Volumes

There are different types of volumes you can use in a Kubernetes pod:

❑ Node-local storage (emptyDir and hostPath)

❑ Cloud volumes (e.g., awsElasticBlockStore, gcePersistentDisk, and azureDisk)

❑ File-sharing volumes, such as Network File System (NFS)

❑ Distributed file systems (e.g., CephFS and GlusterFS)

❑ Special volume types such as persistentVolumeClaim, secret, configMap, and gitRepo (deprecated)

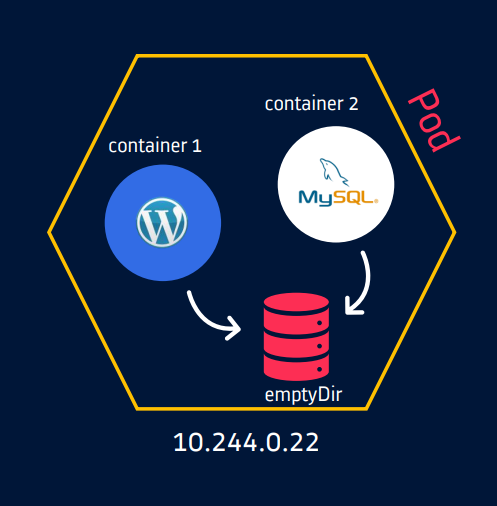

emptyDir

An emptyDir volume is first created when a pod is assigned to a node.

It is initially empty and has the same lifetime as the pod.

emptyDir volumes are stored on whatever medium backs the node. That might be disk, SSD, network storage, or RAM.
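When RAM-backed scratch space is wanted, the emptyDir spec accepts a `medium: Memory` setting, which mounts a tmpfs instead of using the node's disk. Here is a minimal sketch. The pod name `emptydir-memory-pod`, the volume name `scratch`, and the 64Mi limit are illustrative assumptions, not part of the original demo:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: emptydir-memory-pod     # hypothetical name, for illustration only
spec:
  volumes:
  - name: scratch               # hypothetical volume name
    emptyDir:
      medium: Memory            # back the volume with tmpfs (RAM) instead of node disk
      sizeLimit: 64Mi           # illustrative cap on how much the volume may hold
  containers:
  - name: app
    image: busybox
    command: ["/bin/sh", "-c"]
    # Print the mounted file system so the tmpfs backing is visible, then idle
    args: ["df -h /scratch; sleep 1000"]
    volumeMounts:
    - mountPath: /scratch
      name: scratch
```

Files written to a memory-backed emptyDir count toward the container's memory usage, so a size limit is usually worth setting.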

Containers in the Pod can all read and write the same files in the emptyDir volume.

This volume can be mounted at the same or different paths in each Container.
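To illustrate mounting one volume at different paths, here is a sketch in which a writer container sees the volume at `/out` and a reader container sees the same files at `/in`. All names here (`emptydir-paths-pod`, `shared`, `writer`, `reader`) are hypothetical:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: emptydir-paths-pod      # hypothetical name
spec:
  volumes:
  - name: shared
    emptyDir: {}
  containers:
  - name: writer
    image: busybox
    command: ["/bin/sh", "-c"]
    # Append a timestamp every 5 seconds to a file on the shared volume
    args: ["while true; do date >> /out/log.txt; sleep 5; done"]
    volumeMounts:
    - mountPath: /out           # the volume appears at /out in this container
      name: shared
  - name: reader
    image: busybox
    command: ["/bin/sh", "-c"]
    # Wait until the writer has created the file, then follow it
    args: ["until [ -f /in/log.txt ]; do sleep 1; done; tail -f /in/log.txt"]
    volumeMounts:
    - mountPath: /in            # the same volume appears at /in here
      name: shared
      readOnly: true            # the reader only needs read access
```

The reader waits for the file to exist because the start order of containers in a pod does not guarantee that the writer has already run.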

When a Pod is removed from a node for any reason, the data in the emptyDir is deleted forever.

It is mainly used to store cache or temporary data that is being processed.

Let’s Dive into the Practical:

Create “emptyDir-demo.yml”

    apiVersion: v1
    kind: Pod
    metadata:
      name: emptydir-pod
      labels:
        app: busybox
        purpose: emptydir-demo
    spec:
      volumes:
      - name: cache-volume
        emptyDir: {}
      containers:
      - name: container-1
        image: busybox
        command: ["/bin/sh", "-c"]
        args: ["date >> /cache/date.txt; sleep 1000"]
        volumeMounts:
        - mountPath: /cache
          name: cache-volume
      - name: container-2
        image: busybox
        command: ["/bin/sh", "-c"]
        args: ["cat /cache/date.txt; sleep 1000"]
        volumeMounts:
        - mountPath: /cache
          name: cache-volume

To apply it, run this command:

    kubectl apply -f emptyDir-demo.yml

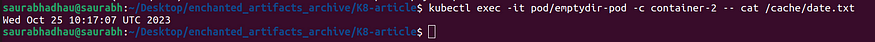

Run this command to read, from the second container, the file written by the first container:

    kubectl exec -it pod/emptydir-pod -c container-2 -- cat /cache/date.txt

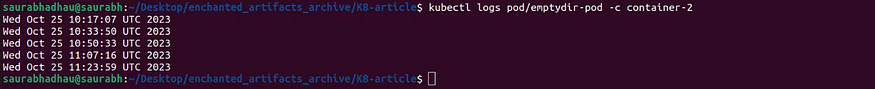

Run this command to see the logs of the second container:

    kubectl logs pod/emptydir-pod -c container-2

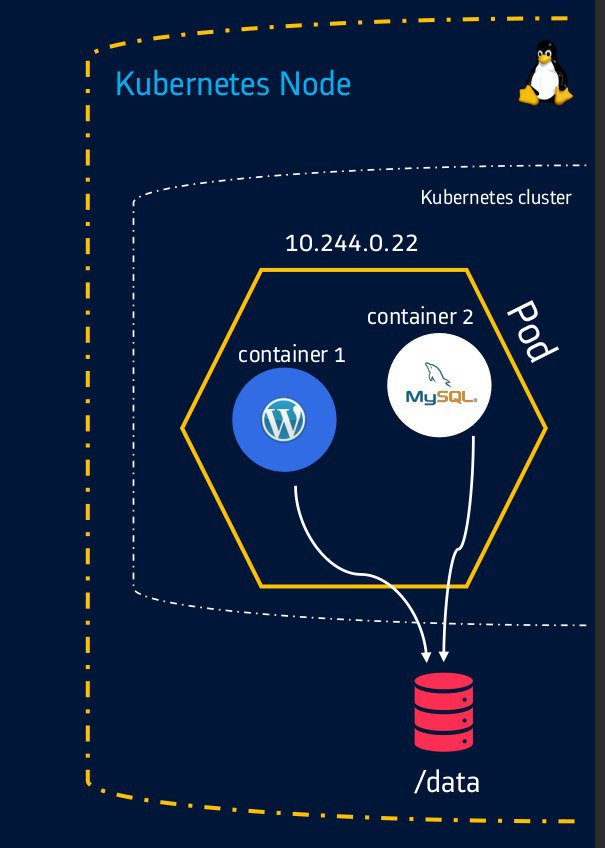

hostPath

A hostPath volume mounts a file or directory from the host node's filesystem into your pod.

The hostPath directory refers to a directory on the node where the pod is running.

Use it with caution: when pods are scheduled on multiple nodes, each node gets its own hostPath storage. These directories may not be in sync with each other, so different pods might see different data.

Let's say a pod with a hostPath configuration is deployed on worker node 2. Then the host is worker node 2, so any hostPath location mentioned in the manifest file refers to worker node 2 only.

When a node becomes unstable, its pods might fail to access the hostPath directory and eventually get terminated.
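Because a hostPath always resolves on whichever node runs the pod, one way to make the location predictable is to pin the pod to a specific node. Here is a sketch of that idea. It assumes a node whose hostname label is `worker-node-2`, which is a hypothetical value, and uses a hypothetical pod name:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: hostpath-pinned-pod               # hypothetical name
spec:
  nodeSelector:
    kubernetes.io/hostname: worker-node-2 # assumed node hostname; adjust to your cluster
  volumes:
  - name: host-data
    hostPath:
      path: /data
      type: Directory                     # require that /data already exists on that node
  containers:
  - name: app
    image: busybox
    command: ["/bin/sh", "-c"]
    # List the host directory as seen from inside the container, then idle
    args: ["ls -l /host-data; sleep 1000"]
    volumeMounts:
    - mountPath: /host-data
      name: host-data
```

Pinning trades scheduling flexibility for predictability: if that node is unavailable, the pod cannot be scheduled anywhere else.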

Let’s Dive into the Practical:

Create “hostPath-demo.yml”:

    apiVersion: v1
    kind: Pod
    metadata:
      name: hostpath-pod
    spec:
      volumes:
      - name: hostpath-volume
        hostPath:
          path: /data
          type: DirectoryOrCreate
      containers:
      - name: container-1
        image: busybox
        command: ["/bin/sh", "-c"]
        args: ["ls /cache ; sleep 1000"]
        volumeMounts:
        - mountPath: /cache
          name: hostpath-volume

To apply it, run this command:

    kubectl apply -f hostPath-demo.yml

You can see the new pod is running. Now let’s talk about Persistent Volume and Persistent Volume Claim.

Persistent Volume and Persistent Volume Claim

Managing storage is a distinct problem inside a cluster. You cannot rely on emptyDir or hostPath for persistent data.

Also, providing a cloud volume such as EBS or Azure Disk tends to be complex, because each service provider has its own configuration options.

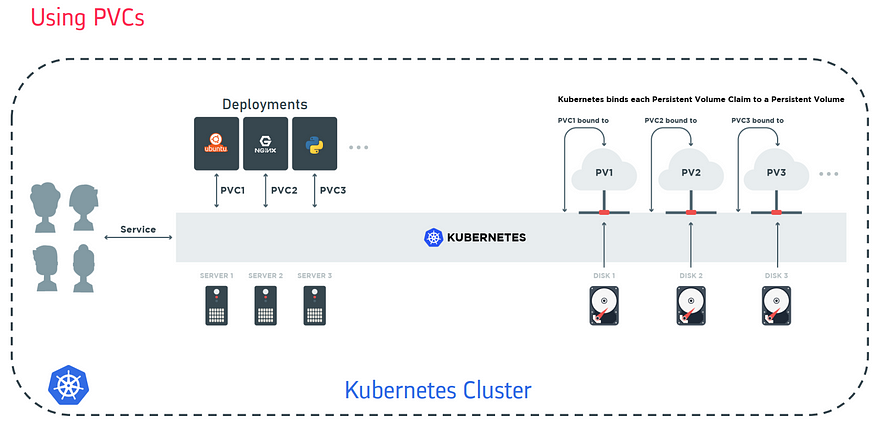

To overcome this, the PersistentVolume subsystem provides an API for users and administrators that abstracts the details of how storage is provided from how it is consumed. To do this, Kubernetes offers two API resources: PersistentVolume and PersistentVolumeClaim.

Persistent volume (PV)

A PersistentVolume (PV) is a piece of storage in the cluster that has been provisioned by an administrator or dynamically provisioned using Storage Classes (pre-defined provisioners and parameters used to create a PersistentVolume).
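As a rough illustration of what a Storage Class looks like, here is a sketch. It assumes the AWS EBS CSI driver is installed in the cluster; the class name `fast-ssd` and the `gp3` volume type are illustrative choices, and other provisioners take different parameters:

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: fast-ssd                          # hypothetical class name
provisioner: ebs.csi.aws.com              # assumes the AWS EBS CSI driver is installed
parameters:
  type: gp3                               # provisioner-specific parameter (EBS volume type)
reclaimPolicy: Delete                     # delete the backing volume when the claim is removed
volumeBindingMode: WaitForFirstConsumer   # provision only once a pod actually uses the claim
```

A claim that names this class in its `storageClassName` would then get a PV created on demand instead of picking one from a pre-provisioned pool.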

With static provisioning, an administrator creates a pool of PVs for users to choose from.

It is a cluster-wide resource used to store/persist data beyond the lifetime of a pod.

A PV is typically backed not by locally attached storage on a worker node but by a networked storage system, such as cloud provider storage, NFS, or a distributed file system like Ceph or GlusterFS.

PersistentVolumes provide storage that can be mounted into pods without being tied to any particular node.
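A statically provisioned PV backed by NFS might look like the following sketch. The server address `nfs.example.internal`, the export path, the name `nfs-pv`, and the `manual` storage class label are all placeholders assumed for illustration:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv                            # hypothetical name; PVs are cluster-scoped, so no namespace
spec:
  capacity:
    storage: 5Gi                          # size advertised to claims
  accessModes:
  - ReadWriteMany                         # NFS can be mounted read-write by many nodes
  persistentVolumeReclaimPolicy: Retain   # keep the data after the claim is deleted
  storageClassName: manual                # arbitrary label that claims can match on
  nfs:
    server: nfs.example.internal          # placeholder NFS server address
    path: /exports/data                   # placeholder export path
```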

Persistent Volume Claim (PVC)

To use a PV, a user first needs to claim it using a PVC.

A PVC requests a PV with the desired specification (size, access mode, storage class, etc.) from Kubernetes. Once the claim is bound to a matching PV, a resource (pod, deployment…) can mount it as a volume.

The user doesn't need to know the underlying provisioning details. A claim must be created in the same namespace as the pod that uses it.
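The claim-then-mount flow can be sketched as a PVC plus a pod that references it by name. This assumes the cluster already has (or can provision) a PV that satisfies a 5Gi ReadWriteMany request with storage class `manual`. The names `data-claim` and `pvc-pod` are hypothetical:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: data-claim              # hypothetical claim name
  namespace: default            # must match the namespace of the pod below
spec:
  accessModes:
  - ReadWriteMany
  storageClassName: manual      # assumed class; must match an available PV or provisioner
  resources:
    requests:
      storage: 5Gi              # minimum capacity the bound PV must offer
---
apiVersion: v1
kind: Pod
metadata:
  name: pvc-pod                 # hypothetical pod name
  namespace: default
spec:
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: data-claim     # refers to the claim, not to the PV directly
  containers:
  - name: app
    image: busybox
    command: ["/bin/sh", "-c"]
    # Write a timestamp to the persistent volume, then idle
    args: ["date >> /data/date.txt; sleep 1000"]
    volumeMounts:
    - mountPath: /data
      name: data
```

The pod only names the claim, so the backing storage can change without touching the pod manifest.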


In this exploration of Kubernetes storage, we’ve covered the versatility of Kubernetes Volumes and the seamless data management enabled by Persistent Volumes (PVs) and Persistent Volume Claims (PVCs).

With these tools at your disposal, you have the means to match the right storage to your applications, whether they’re stateless or stateful, ensuring data availability and reliability. Kubernetes empowers you to manage your storage efficiently and effectively in the dynamic world of containerized applications.

So, embrace this knowledge and keep pushing the boundaries of what you can achieve in the Kubernetes ecosystem. Happy containerizing! 🚀🐳📦